COPE Guidelines for Editors and Publishers: Tortured Phrases in Scientific Articles and Implications for Research Integrity

It is important that flawed results do not enter the scientific literature. No one would want their reasoning to be based on false premises. Just as the scientific community would not accept a treatment based on unreliable clinical trials, it does not want researchers or the public to rely on unreliable data.

Since my colleagues and I reported that tortured phrases had marred the literature2, publishers — and not just those deemed predatory — have been retracting hundreds of articles as a result. Springer Nature has removed more than 300 articles from its site due to nonsensical text.

Publishers have to update their practices and add more resources to both the editorial and research-integrity teams.

Journals should also contact researchers who reviewed an article that went on to be retracted on technical grounds — for their own information and, if the technical issue is in their area of expertise, to prompt them to be more cautious in future.

We propose that changes to how COPE operates, along with updates to its guidance, could benefit readers. We and others have, in the past, asked COPE for help in resolving integrity concerns that we felt journals and publishers had addressed inadequately, only to find its responses slow (often taking several months and more than one enquiry), opaque and unhelpful. Some correspondence went unanswered, and some responses acknowledged problems that were never followed up.

Publishers should also “unmistakably” identify retracted articles, as stated in the guidelines from the Committee on Publication Ethics (COPE; see go.nature.com/4dh7fdg). A watermarked banner is typically added to the article’s PDF file, but any copy downloaded before the retraction took place will not include this crucial caveat.

To help independent tools such as the Feet of Clay Detector harvest data on the current status of articles in their journals, all publishers should publicly release the reference metadata of their entire catalogue.

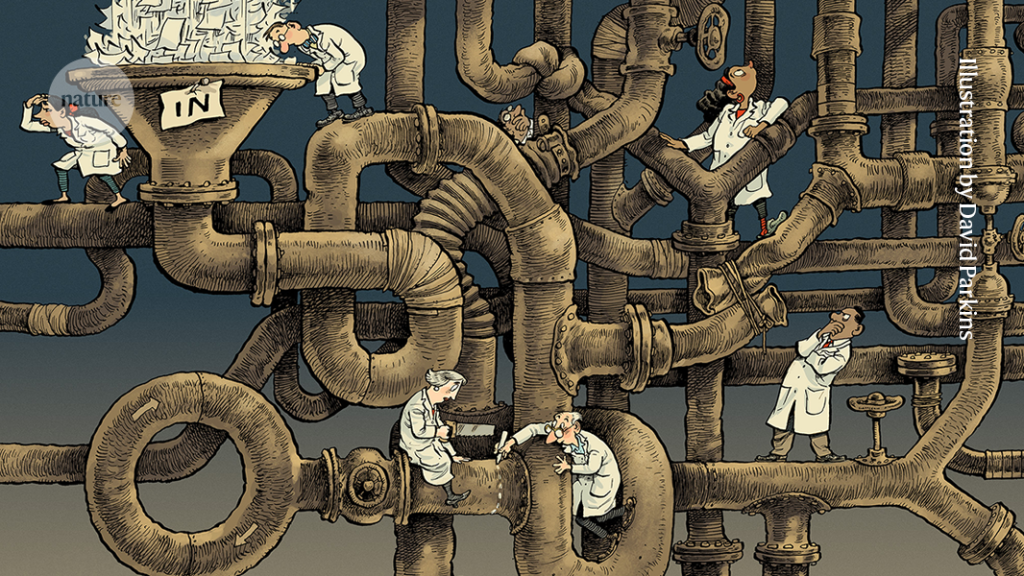

Publishers can change their practices and processes. They should run submitted manuscripts through tools that check for plagiarism, doctored images, tortured phrases, retracted or questionable references, non-existent references generated by artificial intelligence, and citation plantations that benefit certain individuals.

Editors, co-authors, referees and typesetters should keep an eye out for unnatural phrases in articles. Such phrases can expose text that has been generated by artificial intelligence or by an elaborate form of copy-and-paste that uses a translation tool to make the text unrecognizable to plagiarism-detection tools.
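A minimal sketch of how unnatural phrases can be screened automatically, assuming a simple dictionary lookup. The phrase list and the function name `find_tortured_phrases` are illustrative assumptions, not the Problematic Paper Screener's actual implementation; a real screener would need a far larger, curated list. Each entry maps a tortured phrase to the established term it likely replaced.

```python
import re

# Illustrative mini-dictionary (an assumption for this sketch):
# tortured phrase -> established term it probably replaced.
TORTURED_PHRASES = {
    "counterfeit consciousness": "artificial intelligence",
    "profound learning": "deep learning",
    "irregular woodland": "random forest",
    "colossal information": "big data",
}


def find_tortured_phrases(text):
    """Return (phrase, expected term, count) for each dictionary phrase found."""
    # Lowercase and collapse whitespace so phrases split across lines still match.
    normalized = " ".join(text.lower().split())
    hits = []
    for phrase, expected in TORTURED_PHRASES.items():
        # Word boundaries avoid matching inside longer words.
        pattern = r"\b" + re.escape(phrase) + r"\b"
        count = len(re.findall(pattern, normalized))
        if count:
            hits.append((phrase, expected, count))
    return hits


if __name__ == "__main__":
    sample = ("We train a profound learning model and compare it with an "
              "irregular woodland classifier on colossal information.")
    for phrase, expected, count in find_tortured_phrases(sample):
        print(f"{phrase!r} (expected {expected!r}) x{count}")
```

A dictionary lookup only catches phrases someone has already identified, which is why human readers remain essential for spotting new ones.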

Another tool is the Feet of Clay Detector, which quickly spots articles that cite retracted papers in their reference lists (see go.nature.com/3ysnj8f). I have added PubPeer comments to more than 1,700 such articles to prompt readers to assess the reliability of the references.
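The core check behind a tool like the Feet of Clay Detector can be sketched as a set intersection between each article's cited DOIs and a list of retracted DOIs. This is a hypothetical sketch, not the detector's actual code: it assumes reference lists are already available as DOIs (for example, from publicly released reference metadata) and that a list of retracted DOIs has been obtained separately. The names `Article`, `normalize_doi` and `flag_citing_articles`, and the example DOIs, are illustrative.

```python
from dataclasses import dataclass, field
from typing import Dict, Iterable, List


@dataclass
class Article:
    doi: str
    references: List[str] = field(default_factory=list)  # cited DOIs


def normalize_doi(doi: str) -> str:
    """DOIs are case-insensitive; lowercase and strip common resolver prefixes."""
    doi = doi.strip().lower()
    for prefix in ("https://doi.org/", "http://doi.org/", "doi:"):
        if doi.startswith(prefix):
            doi = doi[len(prefix):]
    return doi


def flag_citing_articles(articles: Iterable[Article],
                         retracted_dois: Iterable[str]) -> Dict[str, List[str]]:
    """Map each article DOI to the sorted retracted DOIs it cites."""
    retracted = {normalize_doi(d) for d in retracted_dois}
    flagged = {}
    for art in articles:
        cited_retracted = sorted({normalize_doi(r) for r in art.references} & retracted)
        if cited_retracted:
            flagged[normalize_doi(art.doi)] = cited_retracted
    return flagged


if __name__ == "__main__":
    # Hypothetical DOIs for illustration only.
    corpus = [
        Article("10.1000/a1", ["https://doi.org/10.1000/R1", "10.1000/ok"]),
        Article("10.1000/a2", ["10.1000/ok"]),
    ]
    print(flag_citing_articles(corpus, ["10.1000/r1"]))
```

Normalizing DOIs before comparison matters because the same reference can appear as a bare DOI, a resolver URL or in different letter case.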

Before including a reference in a manuscript draft, authors should check for post-publication criticism and retractions.

How to Check Your References Before Submitting Your Article: Tools for Authors and Researchers

Two PubPeer extensions are instrumental. One plug-in flags any paper that has received comments on PubPeer, which can include corrections and retractions. The other does the same for articles in a user’s digital library. For local copies of downloaded PDFs, the publishing industry offers Crossmark: readers can click the Crossmark button to check the article’s current status on the publisher’s landing page.

Yet even published articles riddled with dozens of these ‘tortured phrases’ are slow to be investigated, corrected or retracted. The Problematic Paper Screener that I launched has flagged more than 16,000 papers for use of tortured phrases, and only 18% of them have been retracted.

In February 2021, I launched the Problematic Paper Screener (PPS; see go.nature.com/473vsgb). This software originally flagged randomly generated text in published papers. It now tracks a variety of issues to alert the scientific community to potential errors.

There is more than one avenue for questioning a study after publication, such as commenting on PubPeer, where many papers are being flagged. Almost all the comments that articles receive on PubPeer are critical. But the authors of a criticized paper are not obliged to respond, and publishers do not monitor these comments. It is common for post-publication comments, including those from eminent researchers in the field, to raise potentially important issues that go unacknowledged by the authors and the journal.

And journals are notoriously slow. The process requires journal staff to mediate a conversation between the authors of the criticized paper and everyone else, including post-publication reviewers who scrutinized the data. Investigations can take months or even years before the outcome is made public.

If a paper is suspicious, scientists can flag it to the editorial team of the journal in which it appeared. But it can be hard to know how to raise concerns, and with whom. Furthermore, this process is typically not anonymous and, depending on the power dynamics at play, some researchers might be unwilling or unable to enter these conversations.

Paper mills have popped up to take advantage of the system. They produce and sell fake manuscripts based on fabricated data, as well as authorships and citations.

A researcher’s performance metrics, including the number of papers published, citations acquired and peer-review reports submitted, can all build reputation and visibility, leading to invitations to speak at conferences, review manuscripts, guest-edit special issues and join editorial boards. This can give more weight to job or promotion applications, be key to attracting funding and lead to more citations, all of which can build a high-profile career. Institutions welcome scientists who publish a lot, are highly cited and attract funding.

Over the past few decades, article retractions have increased in number and severity, reaching a new high of more than 14,500 last year, compared with fewer than 1,000 per year before 2009.

How Quickly Should Journals Act? False Data in Clinical-Trial Reports and Recommendations from the UK Parliament

An assessment of clinical-trial reports sounded alarm bells in 2020. Of 153 reports submitted to a single journal, Anaesthesia, 44% were found to contain false data1. This high proportion, which tallies with other estimates of the percentage of unreliable randomized controlled trials in medical journals, suggests that many dubious publications are in the public domain. Such papers can harm clinical practice and misdirect future research.

The recommended time frames for contacting institutions can cause delays. Take plagiarism, for instance: if neither the author nor the institution responds to concerns, a COPE flowchart recommends that journals “keep contacting the institution every 3–6 months”, potentially creating an endless loop. Institutions often struggle to provide timely, objective and expert assessments of perceived malfeasance by their employees, and they might want to protect their reputations.

Time frames are needed for decision-making. For example, a 2023 report on research integrity by the UK Parliament’s Science, Innovation and Technology Select Committee proposed that decisions about publication integrity should ideally be made within two months (see go.nature.com/3y4r2bq). The proposal was made on the basis of feedback from funding bodies, universities and researchers. In practice, we consider six months a reasonable time frame, with editorial actions undertaken immediately after the process is completed.

The recommendations should also cover journals collecting and reviewing key study information at the manuscript-submission stage. Staff could assess details of ethical oversight and institutional governance before peer review, and statistical advisers could check raw data. These checks would reduce the number of papers that need to be assessed later.

Efficient and transparent responses from COPE would reassure readers that requests for assistance are being taken seriously, provide readers with an opportunity to point out inadequate journal responses and improve the efficiency of journal assessments.

Critics might argue that shifting the focus to prevention would make it less likely that problems arise in the first place. But the reasons for compromised integrity can be examined only after integrity issues have been identified and the literature corrected.

We are not aware of COPE ever having applied sanctions to its members. Using sanctions, and making that action public, would probably improve the performance of journals and publishers.

Journals and publishers might argue that these recommendations would involve a lot of work. The task might seem daunting, but it is worth doing. Some of the publishing industry’s profits should be invested in quality control.