Defending Islamic State Attacks with New Social Media: The Case for the Narrowing of Section 230 to protect Users’ Online Interactions

A social media platform urged the Supreme Court not to narrow Section 230, warning that users could otherwise be sued for their online interactions.

The two cases could result in a fundamental change to how platforms can recommend content, particularly material produced by terrorist organizations. Both stem from lawsuits claiming that YouTube, Twitter, and other platforms provided support for Islamic State attacks by failing to remove (and, in some cases, by recommending) accounts and posts by terrorists. Section 230 of the Communications Decency Act shields websites from liability for illegal content posted by their users, and the plaintiffs in Gonzalez v. Google claim that shield should not apply to these recommendations. Twitter v. Taamneh covers a distinct but related question: whether these services are providing unlawful material support if they fail to kick terrorists out.

The petition for Supreme Court review argued that the videos users viewed on YouTube were the primary means by which the group gained recruits from areas other than Syria and Iraq.

The First Amendment or the First Amendment? The Dispute on Section 230 of the Communications Decency Act (Conspiracy for Big Tech and the Internet)

At the center of two cases to be argued over two days is Section 230 of the 1996 Communications Decency Act, passed by Congress when internet platforms were just beginning. Section 230 distinguishes computer service providers from other sources of information. Whereas newspapers and broadcasters can be sued for defamation and other wrongful conduct, Section 230 says that websites are not publishers or speakers and cannot be sued for material that appears on those sites. Essentially, the law treats web platforms the same way that it treats the telephone. Like the phone company, websites that host speakers can’t be sued for what those speakers say.

The law is critiqued on both sides of the aisle. Many Republican officials allege that Section 230 gives social media platforms a license to censor conservative viewpoints. Prominent Democrats, including President Joe Biden, have argued Section 230 prevents tech giants from being held accountable for spreading misinformation and hate speech.

To put it bluntly, the First Amendment doesn’t work if the legal system doesn’t work. The exceptions to free speech don’t matter if people aren’t censured for serious violations or if verdicts are a vestigial part of cases brought for clout. If the courts don’t take it seriously, it’s useless.

Lawmakers have turned a cultural backlash against Big Tech into a series of glib sound bites and election-year political warfare. Scratch the surface of supposedly “bipartisan” internet regulation, and you’ll find a mess of mutually exclusive demands fueled by reflexive outrage. Some of the people most vocally defending the First Amendment are the ones most open to dismantling it — without even admitting that they’re doing so.

Conservatives have been critics of Section 230 for years because they say it allows social media platforms to suppress conservatives for political reasons.

The First Amendment and the Law of First Amendment: Where are we going? What do we need to do? How should social media platforms respond when politicians complain?

No provider or user of an interactive computer service shall be treated as the publisher or speaker of any information provided by another information content provider.

The law was passed in 1996, and courts have interpreted it expansively since then. It effectively means that web services can’t be sued for displaying someone else’s illegal speech. The law was passed in response to a couple of defamation cases, but it has been found to cover far more than defamation. In addition, it means courts can dismiss most lawsuits over web platform moderation, particularly since there’s a second clause protecting the removal of “objectionable” content.

The thing is, these complaints get a big thing right: in an era of unprecedented mass communication, it’s easier than ever to hurt people with illegal and legal speech. But the issue is far bigger and more complicated than encouraging more people to sue Facebook — because, in fact, the legal system has become part of the problem.

The fact that claims about science are false doesn’t mean companies should remove them. There is a good reason why the First Amendment protects shaky scientific claims. Without that protection, researchers and news outlets might be sued for publishing good-faith assumptions about covid that were later proven incorrect, like covid not being airborne.

Removing Section 230 protections is a sneaky way for politicians to get around the First Amendment. Without 230, the cost of operating a social media site in the United States would skyrocket due to litigation. Sites could face lengthy lawsuits even over legal content if they were unable to invoke a straightforward 230 defense. And when it comes to categories of speech that are dicier, web platforms would be incentivized to remove posts that might be illegal (anything from unfavorable restaurant reviews to MeToo allegations) even if they would have ultimately prevailed in court. Money and time would burn in different ways. It’s no wonder platform operators do what it takes to keep 230 alive. When politicians gripe, the platforms respond.

Why Social Media Has Left Me So Bad, But It Hasn’t Left Me (So What?), and What Have I Learned About Johnny Depp and Amber Heard?

It is not clear whether it matters. Alex Jones declared corporate bankruptcy during the proceedings and left Sandy Hook families struggling to chase his money for more than a year. He treated the court proceedings contemptuously and used them to hawk dubious health supplements to his followers. Legal fees and damages have hurt his finances, but the legal system has failed to change his behavior. If anything, it gave him another platform to proclaim himself a martyr.

Contrast this with the year’s other big defamation case: Johnny Depp’s lawsuit against Amber Heard, who had identified publicly as a victim of abuse (implicitly at the hands of Depp). The case was not as cut-and-dried as Jones’, and Heard lacked a lot of the things that Jones had. The case turned into a ritual public humiliation of Heard, fueled partly by the incentives of social media but also by courts’ utter failure to respond to the way that things like livestreams contributed to the media circus. The worst offenders are already beyond shame, while defamation claims can really hurt people who have to maintain a reputation.

Up until this point, I’ve almost exclusively addressed Democratic and bipartisan proposals to reform Section 230 because those at least have some shred of substance to them.

Republican-proposed speech reforms are ludicrously, bizarrely bad. Over the past year, we have learned just how bad from bills passed by Republican legislators in Texas and Florida that ban social media moderation on the grounds that it is being used to ban conservatives from posting on sites such as Facebook.

The First Amendment should render these bans unconstitutional. They are government speech regulations! But while an appeals court blocked Florida’s law, the Fifth Circuit Court of Appeals threw a wrench in the works with a bizarre surprise decision to uphold Texas’ law without explaining its reasoning. Ken White, a legal commentator, called the court’s later opinion the most angrily incoherent First Amendment decision he had ever read.

In a blog post last week, Twitter said it had not changed its policies but that its approach to enforcement would rely heavily on de-amplification of violative tweets, something that Twitter already did, according to both the company’s previous statements and journalist Bari Weiss’ Friday tweets. “Freedom of speech,” the blog post stated, “not freedom of reach.”

The First Amendment and Social Science: How Government can help the fight against censorship and erotica in the Internet, or how Facebook and Netflix are doing it

Three conservative justices voted against putting the law on hold. (Liberal Justice Elena Kagan did, too, but some have interpreted her vote as a protest against the “shadow docket” where the ruling happened.)

Only a useful idiot would support Texas and Florida’s laws. The rules are transparently rigged to punish political targets at the expense of basic consistency. They attack “Big Tech” platforms for their power, conveniently ignoring the near-monopolies of other companies like internet service providers, who control the chokepoints letting anyone access those platforms. There is no saving a movement that exempts Disney from speech laws because of its spending power in Florida, then proposes blowing up the entire copyright system to punish the company for not adhering to the rules.

While they rant about tech platform censorship, politicians are trying to ban children from getting media that supports trans, gay, or gender-nonconforming people. On top of getting books pulled from schools and libraries, a Republican state delegate in Virginia dug up a rarely used obscenity law to stop Barnes & Noble from selling the graphic memoir Gender Queer and the young adult novel A Court of Mist and Fury, a suit that, in a victory for a functional American court system, was thrown out earlier this year. The panic affecting LGBTQ Americans doesn’t end there. Even as Texas is trying to stop Facebook from kicking off violent insurrectionists, it’s suing Netflix for distributing the Cannes-screened film Cuties under a constitutionally dubious law against “child erotica.”

There is a tradeoff here: if you take the First Amendment at its broadest possible reading, virtually all software is speech, leaving software-based services impossible to regulate. Airbnb and Amazon have both used Section 230 to defend against claims of providing faulty physical goods and services, an approach that hasn’t always worked but that remains open for companies whose core services have little to do with speech, just software.

Balk’s Law is a bit of an oversimplification. Internet platforms change us because they reward specific kinds of posts, subjects and linguistic quirks. But the internet also holds a vast number of people, crammed into a few places. And it turns out humanity at scale can be unbelievably ugly. Vicious abuse might come from one person, or it might be spread out into a campaign of threats, lies, or stochastic terrorism involving thousands of different people, none of it quite rising to the level of a viable legal case.

The issue of how and why Twitter, like other major platforms, limits the reach of certain content has long been a hot-button issue on Capitol Hill and among some prominent social media users, especially conservatives. Twitter has repeatedly said it does not moderate content based on its political leaning, but instead enforces its policies equally in an effort to keep users safe. In 2018, founder and then-CEO Jack Dorsey told CNN in an interview that the company does “not look at content with regards to political viewpoint or ideology. We look at what behavior is doing.”

“Twitter is working on a software update that will show your true account status, so you know clearly if you’ve been shadowbanned, the reason why and how to appeal,” Musk tweeted on Thursday. He did not provide additional details or a timetable.

Musk’s announcement came just hours after the release of new internal Twitter documents, which again highlighted the practice of limiting the reach of certain, potentially harmful content, a practice that Musk himself has seemingly both endorsed and criticized.

Last month, Musk said that “freedom of speech,” not “freedom of reach,” is what the new policy of his company is all about. “Negative/hate tweets will be max deboosted & demonetized, so no ads or other revenue to Twitter.”

With that announcement, Musk, who has said he now votes Republican, prompted an outcry from some conservatives, who accused him of continuing a practice they opposed. The clash reflects an underlying tension at Twitter under Musk, as the billionaire simultaneously has promised a more maximalist approach to “free speech,” a move cheered by some on the right, while also attempting to reassure advertisers and users that there will still be content moderation guardrails.

Weiss’ tweets follow the first “Twitter Files” drop earlier this month from journalist Matt Taibbi, who shared internal Twitter emails about the company’s decision to temporarily suppress a 2020 New York Post story about Hunter Biden and his laptop. Those emails largely corroborated what was already known about the incident.

Weiss suggested that such actions were taken “all without users’ knowledge.” But Twitter has long been transparent about the fact that it may limit certain content that violates its policies and, in some cases, may apply “strikes” that correspond with suspensions for accounts that break its rules. In the case of strikes, users get notice of their accounts being temporarily suspended.

Comment on “The Twitter Files, Part Duex!” by J. C. Weiss and J. J. P. Flanagano

In both cases, the internal documents appear to have been provided directly to the journalists by Musk’s team. Musk on Friday shared Weiss’ thread and added “The Twitter Files, Part Duex!!” along with two popcorn emojis.

Weiss gave several examples of right-leaning figures who had moderation actions taken on their accounts, but it was not clear whether similar actions were taken against left-leaning or other accounts.

She pointed to the tech case the court will hear Wednesday, in which the justices will consider whether an anti-terrorism law covers internet platforms for their failure to adequately remove terrorism-related content. The same law is used by the plaintiffs in Tuesday’s case.

Tech companies involved in the litigation cite Section 230 as part of an argument that they shouldn’t face lawsuits for hosting or suggesting terrorist content.

The law’s central provision holds that websites (and their users) cannot be treated legally as the publishers or speakers of other people’s content. In plain English, that means that any legal responsibility attached to publishing a given piece of content ends with the person or entity that created it, not the platforms on which the content is shared or the users who re-share it.

The executive order faced a number of legal and procedural problems, not least of which was the fact that the FCC is not part of the judicial branch; that it does not regulate social media or content moderation decisions; and that it is an independent agency that, by law, does not take direction from the White House.

The result is a bipartisan hatred for Section 230, even if the two parties cannot agree on why Section 230 is flawed or what policies might appropriately take its place.

That deadlock has shifted much of the momentum for changing Section 230 to the courts, most notably the US Supreme Court, which has an opportunity this term to dictate how far the law extends.

Social Media Should not be sued for aiding and abetting terrorist acts: The High Court Benchmark of Twitter v. Taamneh

Tech critics have called for added legal exposure and accountability. “The massive social media industry has grown up largely shielded from the courts and the normal development of a body of law. It is highly irregular for a global industry that wields staggering influence to be protected from judicial inquiry,” wrote the Anti-Defamation League in a Supreme Court brief.

The closely watched Twitter and Google cases carry significant stakes for the wider internet. An expansion of apps and websites’ legal risk for hosting or promoting content could lead to major changes at sites including Facebook, Wikipedia and YouTube, to name a few.

“‘Recommendations’ are the very thing that make Reddit a vibrant place,” wrote the company and several volunteer Reddit moderators. It is users who determine which posts gain fame and which fade into obscurity.

The brief argued that people would stop using the site, and that moderators would stop volunteering, under a legal regime that carried a serious risk of being sued for recommending a defamatory post created by someone else.

On Wednesday, the Court will hear Twitter v. Taamneh, which will decide whether social media companies can be sued for aiding and abetting a specific act of international terrorism when the platforms have hosted user content that expresses general support for the group behind the violence without referring to the specific terrorist act in question.

The plaintiffs in Gonzalez seek to carve out content recommendations so that they are not protected under Section 230, which could leave tech platforms exposed to more liability.

The Supreme Court took up both cases in October: one at the request of a family that’s suing Google and the other as a preemptive defense filed by Twitter. They’re two of the latest in a long string of suits alleging that websites are legally responsible for failing to remove terrorist propaganda. Section 230 shields companies from liability for hosting illegal content, which has doomed a lot of these suits. The two petitions respond to an opinion from the Ninth Circuit Court of Appeals, which threw out two terrorism-related suits but allowed a third to proceed.

Twitter has said that the mere fact that ISIS used the company’s platform to promote itself does not constitute “knowing” assistance to the terrorist group, and that in any case the company cannot be held liable under the antiterror law because the content at issue in the case was not specific to the attack that killed Alassaf. The Biden administration, in its brief, has agreed with that view.

The Case of Musk and the U.S. Attorney’s Office: How Social Media Companies Make Money from What They Used to Tell Us About ISIS

Many petitions asking the Court to review the Texas law are also pending. The Court last month delayed a decision on whether to hear those cases, asking instead for the Biden administration to submit its views.

Twitter v. Taamneh will put Twitter’s legal performance under its new owner, Elon Musk, under scrutiny. The suit concerns a separate Islamic State attack in Turkey, but like Gonzalez, it concerns whether a platform provided material aid to terrorists. Twitter filed its petition before Musk purchased the platform, aiming to shore up its legal defenses in case the court took up Gonzalez and ruled against Google.

Under the interpretation advanced by Eric Schnapper, the attorney for the plaintiffs, liking or sharing a post may expose individuals to lawsuits that they could not deflect using Section 230.

He says that the longer users stay online, the more money social media companies make, because most of their revenue comes from advertisements.

What’s more, he argues, modern social media company executives knew the dangers of what they were doing. In 2016, he says, they met with high government officials who told them of the dangers posed by ISIS videos, and how they were used for recruitment, propaganda, fundraising, and planning.

The director of national intelligence, as well as the attorney general, FBI director, and then-White House chief of staff, were involved. Those government officials “told them exactly that,” he says.

The Biden Administration’s Rule of State and the Case for a New Section 230 Law to Protect Recommendations of User Content

Google general counsel Halimah DeLaine Prado says the company believes there is no place for extremists on any of its products or platforms.

Prado acknowledges that social media companies today are nothing like the social media companies of 1996, when the interactive internet was an infant industry. But, she says, if there is to be a change in the law, that is something that should be done by Congress, not the courts.

There are many “strange bedfellows” among the tech company allies. The Chamber of Commerce and the libertarian American Civil Liberties Union are among groups that have filed dozens of briefs asking that the court not change the status quo.

But the Biden administration has a narrower position. Columbia law professor Timothy Wu summarizes the administration’s position this way: “It is one thing to be more passively presenting, even organizing information, but when you cross the line into really recommending content, you leave behind the protections of 230.”

In short, hyperlinks, grouping certain content together, sorting through billions of pieces of data for search engines, that sort of thing is OK, but actually recommending content that shows or urges illegal conduct is another matter.

If the Supreme Court were to adopt that position, it would be very threatening to the economic model of social media companies today. The tech industry says that it is difficult to distinguish between recommendations and aggregated information.

And it likely would mean that these companies would constantly be defending their conduct in court. But filing suit and getting over the hurdle of showing enough evidence to justify a trial are two different things. The Supreme Court has made it very difficult to jump that hurdle. The second case the court hears this week deals with that problem.

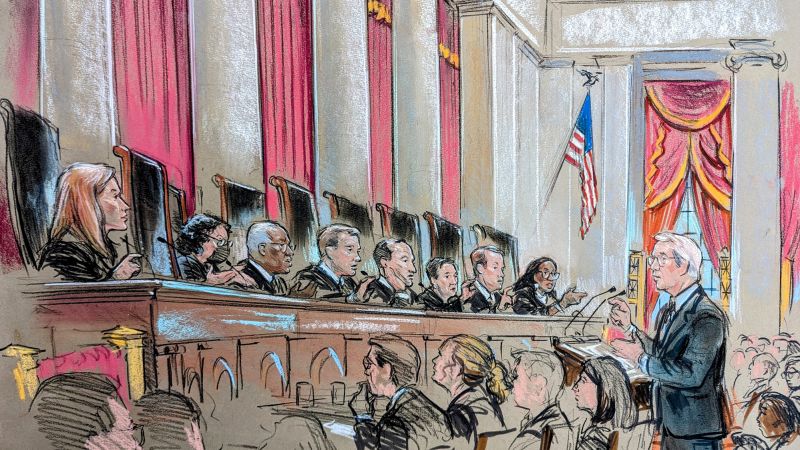

Supreme Court justices appeared broadly concerned Tuesday about the potential unintended consequences of allowing websites to be sued for their automatic recommendations of user content, highlighting the challenges facing attorneys who want to hold Google accountable for suggesting YouTube videos created by terrorist groups.

That line of questioning could have important implications for how websites rank, display and promote content on their sites while trying to avoid litigation.

A ruling for Gonzalez wouldn’t have far-reaching effects because most suits would be thrown out anyway, Eric Schnapper argued.

Later, Justice Elena Kagan warned that narrowing Section 230 could lead to a wave of lawsuits, even if many of them would eventually be thrown out, in a line of questioning with US Deputy Solicitor General Malcolm Stewart.

“You are creating a world of lawsuits,” Kagan said. Any presentational or prioritization choice, she suggested, could then be subject to suit.

Stewart said he would not necessarily agree that there would be lots of lawsuits simply because there are a lot of things to sue about, adding that such suits would not have much likelihood of prevailing.

The justices pressed Schnapper to explain how the court should treat recommendation algorithms that work the same way when they recommend, say, a pilaf recipe to someone who’s interested in cooking and not in terrorism.

Many of the justices seemed lost about what Schnapper was calling for when he tried to explain how YouTube’s video thumbnail images differ from the videos themselves.

If they don’t have a focused program with respect to terrorist activities, it might be harder for you to say that you can be held responsible.

Barrett raised the issue again in a question for Justice Department lawyer Stewart. She asked whether the logic of his position would be that Section 230 would not protect users in that situation either.

Stewart said there was a difference between an individual user making a conscious choice to amplify their content and a system that made decisions on a systemic basis. But Stewart did not provide a clear answer about how he believed changes to Section 230 could affect individual users.

“People have focused on the [Antiterrorism Act], because that’s the one point that’s at issue here,” Chief Justice John Roberts said. If tech companies ceased to have broad Section 230 immunity, he suggested, the legal system could be flooded with other types of claims.

Justice Samuel Alito posed for Schnapper a scenario in which a competitor of a restaurant creates a video falsely accusing it of a health code violation and YouTube then refuses to take the video down despite knowing it is false.

Kagan seized on Alito’s hypothetical later on in the hearing, asking what happens if a platform recommended the restaurant competitor’s false video and called it the greatest video of all time, but didn’t repeat anything about the content of the video.

Even though there was no tough grilling from the justices, they may have differing opinions about the way Section 230 has been interpreted by the courts.

“That the internet never would have gotten off the ground if everybody would have sued was not what Congress was concerned about at the time it enacted this statute,” Jackson said.

The brief was written by Oregon Democratic Senator Ron Wyden and a former California Republican Representative, Chris Cox.

The Supreme Court will not rule against an online search engine, despite a dating site that would not match individuals of different races, or how a decision against YouTube would destroy the internet

If platforms created a search engine that was discriminatory, they could be held liable, Justice Sonia Sotomayor suggested. She put forth an example of a dating site that wouldn’t match individuals of different races. In her questioning of Lisa Blatt, Google’s attorney, Justice Amy Coney Barrett went back to the hypothetical.

Some justices suggested that Google and its allies were playing Chicken Little in the way they warned about how a ruling against them would change the internet.

Alito asked whether the internet would be destroyed if YouTube were held liable for posting and refusing to take down videos that were false.

“So if you lose tomorrow, do we even have to reach the Section 230 question here? Would you concede that you would lose on that ground here?” Barrett asked Schnapper.

Nine justices set out Tuesday to determine what the future of the internet would look like if the Supreme Court were to narrow the scope of a law that some believe created the age of modern social media.

That hesitancy, coupled with the fact that the justices were wading for the first time into new territory, suggests the court, in the case at hand, is not likely to issue a sweeping decision with unknown ramifications in one of the most closely watched disputes of the term.

The family sued under a federal law called the Antiterrorism Act of 1990, which authorizes such lawsuits for injuries “by reason of an act of international terrorism.”

A Time for a Final Remark on Social Media, Artificial Intelligence, and the Internet: Justice Elena Kagan, Justice Alito, and Chief Justice Roberts

Concerns about artificial intelligence, thumbnail pop-ups, endorsements, and even Yelp restaurant reviews were some of the issues raised in oral arguments. At the end of the day, the justices seemed frustrated with the scope of the arguments they were hearing and unsure of what the next step would be.

“I’m afraid I’m completely confused by whatever argument you’re making at the present time,” Justice Samuel Alito said early on. “So I guess I’m thoroughly confused,” Justice Ketanji Brown Jackson said at another point. Justice Clarence Thomas said he was confused halfway through the arguments.

Justice Elena Kagan even suggested that Congress step in. “I mean, we’re a court. We don’t know much about these things. You know, these are not like the nine greatest experts on the internet,” she said to laughter.

Chief Justice John Roberts tried to make an analogy with a book seller. He suggested that a book seller sending a reader to a table of books with related content is the same thing that happens when someone recommends certain information.

Justice Elena Kagan made a funny comment early in arguments for a landmark case about Section 230 of the Communications Decency Act. The remark was a nod to many people’s worst fears about the case. If the Gonzalez plaintiffs succeed, the internet could lose core legal protections at the hands of a court that has shown an appetite for overturning legal precedent and reexamining long-standing speech law.

Introducing liability for this kind of software raises a lot of questions. Should Google be punished for returning search results that link to defamation or terrorist content, even if it’s responding to a direct search query for a false statement or a terrorist video? And conversely, is a hypothetical website in the clear if it writes an algorithm designed deliberately around being “in cahoots with ISIS,” as Justice Sonia Sotomayor put it? While it (somewhat surprisingly) didn’t come up in today’s arguments, at least one ruling has found that a site’s design can make it actively discriminatory, regardless of whether the result involves information filled out by users.

One prominent example of this supposedly “biased” enforcement is Facebook’s 2018 decision to ban Alex Jones, host of the right-wing Infowars website who later was slapped with $1.5 billion in damages after harassing the families of the victims of a mass shooting.