What Do Social Media Companies Really Know About Your Children? A Closer Look at How Parents Can Keep Their Children Safe on Twitter, Instagram and TikTok

Better laws could help revive competition, restrain harmful behavior and even realize the potential of social media to strengthen American democracy rather than undermine it. In short, policymakers can ensure that the question of who owns Twitter, Instagram or TikTok doesn’t matter quite so much.

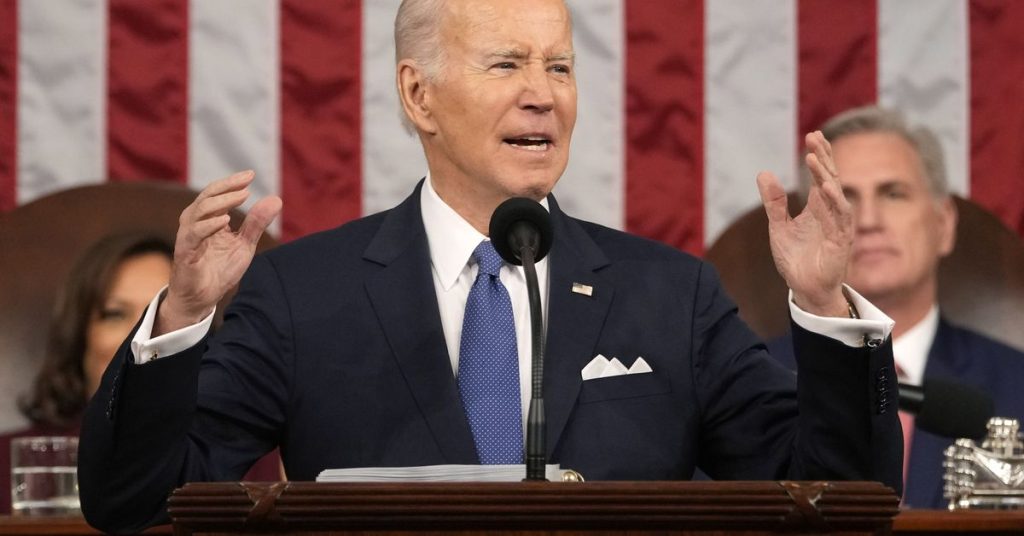

“We must finally hold social media companies accountable for the experiment they are running on our children for profit,” Biden said, as newly minted Speaker Kevin McCarthy, of California, and Vice President Kamala Harris rose to their feet in ovation. He called for legislation to ban Big Tech from collecting personal data on kids and teenagers online, forbid targeted advertising to children, and impose stricter limits on the personal data these companies collect on us all.

According to the digital security director at a market research firm, social media platforms are doing little to counter their ills. Their solutions, she said, put the onus on guardians to activate various parental controls, such as those intended to filter, block and restrict access, and more passive options, such as monitoring and surveillance tools that run in the background.

For now, guardians must learn how to use the parental controls while also being mindful that teens can often circumvent those tools. Here is a closer look at how parents can keep their children safe online.

After the fallout from the leaked documents, Meta-owned Instagram paused its much-criticized plan to release a version of Instagram for kids under age 13 and focused on making its main service safer for young users.

It has since introduced an educational hub for parents with resources, tips and articles from experts on user safety, and rolled out a tool that allows guardians to see how much time their kids spend on Instagram and to set time limits. Parents can also receive updates on which accounts their teens follow and which accounts follow them, see which accounts their teens have blocked, and be notified if their child changes their privacy or account settings. The company also provides video tutorials on how to use the new supervision tools.

Another feature encourages users to take a break from the app after a predetermined amount of time, suggesting, for example, that they take a deep breath, write something down, check a to-do list or listen to a song. If teens have been fixated on one type of content or topic for too long, they will be nudged toward different topics, such as architecture and travel destinations.

The parent guide and hub was introduced to give parents more information about their teens’ use of the app without revealing who they are talking to. Teens have to consent to use the feature, and parents have to create their own account.

TikTok: Tools to Help Teens Filter Content and Manage Screen Time

The company told CNN Business it will continue to build on its safety features and consider feedback from the community, policymakers, safety and mental health advocates, and other experts to improve the tools over time.

In July, TikTok announced new ways to filter out mature or “potentially problematic” videos, using a maturity score to identify videos with mature or complex themes. It also rolled out a tool designed to help people decide how much time they want to spend on TikTok. The tool helps users set screen-time breaks and provides a dashboard showing how many times they opened the app, their daytime usage and more.

In addition to parental controls, the app restricts younger users’ access to some features, such as Live and direct messaging. When their first video is ready to be published, teens can be asked to decide who can watch it. For users ages 13 to 15 and 16 to 17, push notifications are not sent after 10 p.m.

The messaging platform did not appear before the Senate last year, but it has faced criticism over how difficult it is to report problematic content and over the ability of strangers to connect with young users.

Still, it’s possible for minors to connect with strangers on public servers or in private chats if the person was invited by someone else in the room or if the channel link is dropped into a public group that the user accessed. By default, all users — including users ages 13 to 17 — can receive friend invitations from anyone in the same server, which then opens up the ability for them to send private messages.

Bipartisan Legislation to Protect Kids From Predatory Content on Big Online Platforms, as Proposed by Biden in His First State of the Union

The biggest tech companies have been able to mark their own homework for the past ten years. They’ve protected their power through extensive lobbying while hiding behind the infamous tech industry adage, “Move fast and break things.”

Platforms have to change, and other countries have passed legislation to try to make this possible. Germany was the first country in Europe to take a stance against hate speech on social networks, with NetzDG requiring platforms with more than two million users to take down illegal content or face a fine of up to 50 million euros. The Digital Markets Act, which was set out by EU lawmakers in January 2021, bars platforms from giving their own products preferential treatment, while the EU AI Act, which was set out by EU lawmakers in July 2022, involved extensive consultation with civil society organizations. The Nigerian federal government put a new internet code of practice in place to address misinformation and protect children from harmful content.

The UK’s Online Safety Bill has been in the works for several years and effectively places the duty of care for monitoring illegal content on the platforms themselves. It could also impose an obligation on platforms to restrict content that is technically legal but could be considered harmful, which would set a dangerous precedent for free speech and the protection of marginalized groups.

In 2020 and 2021, YouGov and BT (along with the charity I run, Glitch) found that 1.8 million people surveyed said they’d suffered threatening behavior online in the past year. Many members of the LGBTQIA community said that they had experienced racist abuse online, while a small number said that they had not.

“Pass bipartisan legislation to strengthen antitrust enforcement and prevent big online platforms from giving their own products an unfair advantage,” Biden said. “It’s time to pass bipartisan legislation to stop Big Tech from collecting personal data on kids and teenagers online, ban targeted advertising to children, and impose stricter limits on the personal data these companies collect on all of us.”

The address echoed much of what Biden said during his first State of the Union address last year. Child online safety has long been a concern for Congress and the Biden administration, and it reached a crisis point after Facebook whistleblower Frances Haugen leaked internal company documents detailing the dangers Meta’s platforms pose to young users. Haugen attended the president’s previous address as a guest of First Lady Jill Biden, a testament to the administration’s desire for stricter online protections.

Biden highlighted his administration’s work to bolster US competitiveness against China, using the primetime spot to tout the CHIPS and Science Act, which included $52 billion in funding to boost US semiconductor manufacturing. Despite the speech’s focus on China, Biden did not comment on whether his administration would ban TikTok.

Ask Republicans in the US House, and they will tell you that President Joe Biden spoke harshly about them last night. Hell, if you just listened to the State of the Union address, you’d have heard the commander-in-chief heckled as a “liar” and blamed for the opioid epidemic (“It’s your fault!”), and you’d have heard him met with a thunderous and sustained Republican “BOOOOOOOOOOO!”

But most of the rowdy Republicans in the US House of Representatives, who now control the gavels, the thermostats, and the magnetometers, set aside their heckling when Biden turned to their common foe in Silicon Valley.

When the Other Side of the Aisle Stood Up: The Impact of Social Media on Children’s Self-Esteem

Data privacy—a bipartisan concern that’s historically devolved into partisan squabbling and inaction at the end of each congressional session—owned the night. But a popular line in a speech doesn’t mean the US will have a national privacy law anytime in the foreseeable future.

The New Jersey Democrat says the fact that people on the other side of the aisle stood up is a good sign. “It has a very negative impact on [children’s] self-esteem, their self-concept and their well-being,” the lawmaker says of social media. “I believe that he is correct, as leader of the nation, to make noise about the situation.”

“Oh yeah. It’s a big deal,” says Senator Tina Smith, a Democrat from Minnesota. “We do not really know the impact of social media on kids. The child psychologists and experts I talk to say that it’s so dangerous.”