ConceptARC: testing whether AI systems grasp abstract concepts

Mitchell says that machines still come nowhere near human levels on these puzzles. Even so, it was surprising that GPT-4 could solve some of the problems at all, because it had not been trained on them.

The leading bots from the contest were not general-ability systems such as LLMs; they were designed specifically to solve visual puzzles. They did better than GPT-4 but worse than people, with even the best of them scoring less than 60% in one category.

In May, a team of researchers led by Melanie Mitchell, a computer scientist at the Santa Fe Institute in New Mexico, reported the creation of ConceptARC (A. Moskvichev et al.). ConceptARC is a series of visual puzzles designed to test the ability of artificial intelligence to comprehend abstract concepts. The puzzles probe whether a system has grasped 16 underlying concepts by testing each concept in 10 different ways. But ConceptARC addresses just one facet of reasoning and generalization; more tests are needed.

Limitations in the way the test was done probably made it harder for GPT-4. The publicly available version of the LLM can accept only text as input, so the researchers gave GPT-4 arrays of numbers that represented the images. For example, a blank square might be represented by one number and a coloured square by another. The human participants, by contrast, could see the images. “We are comparing a language-only system with humans, who have a highly developed visual system,” says Mitchell. “So it might not be a totally fair comparison.”
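To make the text-only set-up concrete, here is a minimal sketch of how a small picture grid could be turned into rows of numbers and back again. It is an illustration only, not the study's actual encoding. The colour palette, the number assigned to each colour and the function names `grid_to_text` and `text_to_grid` are all hypothetical assumptions.

```python
# Hypothetical colour palette: these codes are illustrative assumptions,
# not the encoding used in the ConceptARC study.
COLOUR_CODES = {"blank": 0, "blue": 1, "red": 2, "green": 3}


def grid_to_text(grid: list[list[str]]) -> str:
    """Encode a grid of colour names as lines of space-separated numbers."""
    return "\n".join(
        " ".join(str(COLOUR_CODES[cell]) for cell in row) for row in grid
    )


def text_to_grid(text: str) -> list[list[str]]:
    """Decode the numeric text back into colour names (inverse of grid_to_text)."""
    names = {code: name for name, code in COLOUR_CODES.items()}
    return [[names[int(tok)] for tok in line.split()] for line in text.splitlines()]


if __name__ == "__main__":
    # A plus-shaped red figure on a blank background.
    puzzle = [
        ["blank", "red", "blank"],
        ["red", "red", "red"],
        ["blank", "red", "blank"],
    ]
    encoded = grid_to_text(puzzle)
    print(encoded)
    # prints:
    # 0 2 0
    # 2 2 2
    # 0 2 0
    assert text_to_grid(encoded) == puzzle  # the encoding loses no information
```

The encoded text carries all of the grid's information. A language model, however, receives it as a flat sequence of tokens and has to reconstruct shapes and spatial relations without any visual system, which is the kind of handicap Mitchell describes.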

OpenAI has created a ‘multimodal’ version of GPT-4 that can accept images as input. Mitchell and her team are waiting for that version to become publicly available so that they can test it on ConceptARC, although she doesn’t expect the multimodal GPT-4 to do much better. She doubts that these systems have the same capacity for abstract, logical thinking that people have.

Theory of mind and the pull of anthropomorphism

Although provocative, the Microsoft Research preprint does not probe GPT-4’s capabilities in a systematic way, says Mitchell, who likens it to anthropology. Ullman says that to be convinced that a machine has theory of mind, he would need to see evidence of an underlying cognitive process corresponding to human-like theory of mind, and not just that the machine can output the same answers as a person.

Scientists are divided over what the performance of LLMs reveals. On one side are researchers who see glimmers of reasoning when the models do well on some tests. On the other are those who see the models’ unreliability as a sign that they are not really intelligent.

Wortham has advice for people trying to understand AI systems: beware of anthropomorphizing them. He says that we anthropomorphize anything that appears to demonstrate intelligence.

“It is a curse, because we can’t think of things which display goal-oriented behaviour in any way other than using human models,” he says. We then assume, he argues, that a system behaves this way because it is thinking like us.

After feeding the ConceptARC tasks to GPT-4, the researchers enlisted 400 people online to attempt the same puzzles. The humans scored, on average, 91% on all concept groups (and 97% on one); GPT-4 got 33% on one group and less than 30% on all the rest.

Designing puzzles that probe concepts

For example, to test the concept of sameness, one puzzle requires the solver to keep the objects in the pattern that have the same shape; another requires keeping the objects that are aligned along the same axis. The goal was to reduce the chance that an AI system could pass the test without grasping the concepts (see ‘An abstract-thinking test that defeats machines’).

Mitchell and her colleagues made a set of new puzzles inspired by an earlier benchmark, the Abstraction and Reasoning Corpus (ARC), but differing from it in two ways. First, the ConceptARC tests are easier: Mitchell’s team wanted to ensure that the benchmark would not miss progress in machines’ capabilities, even if small. Second, the team chose specific concepts to test and then created, for each concept, a set of puzzles that are variations on a theme.

Lake says that the ability to make abstractions from everyday knowledge and apply those to previously unseen problems is a hallmark of human intelligence.

Still, as Bubeck told Nature, “GPT-4 certainly does not think like a person, and for any capability that it displays, it achieves it in its own way”.

Researchers have also probed LLMs more broadly than through conventional machine benchmarks and human exams. In March, Sébastien Bubeck and his colleagues at Microsoft Research made waves with a preprint titled ‘Sparks of artificial general intelligence: Early experiments with GPT-4’. Using an early version of GPT-4, they documented a range of surprising capabilities, many of which were not directly or obviously connected to language. One notable feat was that it could pass tests used by psychologists to assess theory of mind, a core human ability that allows people to predict and reason about the mental states of others. They wrote that GPT-4 could be seen as an early version of an artificial general intelligence system.

Nick Ryder, a researcher at OpenAI, agrees that a model’s performance on one test might not generalize in the way it would for a person who gets the same score. “I don’t think that one should look at an evaluation of a human and a large language model and derive any amount of equivalence,” he says. “The OpenAI scores are not intended to be a statement of human capability or human-like reasoning. It’s meant to be a statement of how the model performs on that task.”

And there is a deeper problem in interpreting what the benchmarks mean. For a person, high scores across these exams would reliably indicate general intelligence: a fuzzy concept, but one that, according to one definition, refers to the ability to perform well across a range of tasks and adapt to different contexts. That is, someone who could do well at the exams can generally be assumed to do well at other cognitive tests and to have grasped certain abstract concepts. But Mitchell says that LLMs work very differently from people. “Extrapolating in the way that we extrapolate for humans won’t always work for AI systems,” she says.

The key, he says, is to take the LLM outside its comfort zone. He suggests presenting it with scenarios that are variations on ones it will have seen many times in its training data. In such cases, the LLM can end up spitting out words associated with the original question rather than giving the correct answer to the new scenario.

OpenAI checked for contamination by searching the test questions and its training data for similar strings of words. When it tested its LLMs before and after removing the similar strings, there was little difference in performance. However, some researchers have questioned whether this check is stringent enough.
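The idea behind such a check can be illustrated with a simple word-overlap test. The worry is that a model may already have seen benchmark questions in its training data, which is known as contamination. The sketch below assumes a plain word n-gram overlap rule with an arbitrary n-gram length `n`. It is not OpenAI’s actual procedure, and the function names are hypothetical. It flags every question that shares a run of `n` consecutive words with the training text, then compares accuracy on all questions with accuracy on the unflagged ones.

```python
import re


def word_ngrams(text: str, n: int) -> set[tuple[str, ...]]:
    """Return the set of lowercase word n-grams (runs of n consecutive words) in text."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def find_contaminated(questions: list[str], training_docs: list[str], n: int = 8) -> list[int]:
    """Indices of questions sharing at least one word n-gram with the training text.

    The default n=8 is an arbitrary illustrative choice, not a documented setting.
    """
    seen: set[tuple[str, ...]] = set()
    for doc in training_docs:
        seen |= word_ngrams(doc, n)
    return [i for i, q in enumerate(questions) if word_ngrams(q, n) & seen]


def compare_scores(correct: list[bool], flagged: list[int]) -> tuple[float, float]:
    """Accuracy on all questions versus accuracy with the flagged questions removed."""
    if not correct:
        raise ValueError("need at least one graded question")
    flagged_set = set(flagged)
    clean = [ok for i, ok in enumerate(correct) if i not in flagged_set]
    overall = sum(correct) / len(correct)
    cleaned = sum(clean) / len(clean) if clean else float("nan")
    return overall, cleaned


if __name__ == "__main__":
    questions = [
        "What is the capital of France and why is it famous",
        "Name a prime number larger than ten",
    ]
    training_docs = ["Quiz: what is the capital of France? Paris."]
    flagged = find_contaminated(questions, training_docs, n=4)
    print(flagged)  # [0]: the first question overlaps the training text
    print(compare_scores([True, False], flagged))  # (0.5, 0.0)
```

If the two accuracies are close, contamination probably does not explain much of the score. An exact-overlap rule like this misses paraphrased or lightly reworded questions, however, which is one reason a check of this kind may not be stringent enough.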

According to Mitchell, language models can do very well on a lot of these benchmarks. “But often, the conclusion is not that they have surpassed humans in these general capacities, but that the benchmarks are limited.” One challenge is that the models are trained on so much text that they might already have seen similar questions in their training data. This issue is known as contamination.

The Turing test: a test centred on deceit

It’s the kind of game that researchers familiar with LLMs could probably still win, however. He says it would be easy to detect an LLM by taking advantage of the systems’ weaknesses. “If you put me in a situation where you asked me, ‘Am I chatting to an LLM right now?’ I’m pretty sure I would be able to tell you.”

Chollet and others, however, are sceptical about using a test centred on deceit as a goal for computer science. “It’s all about trying to deceive the jury,” says Chollet. The test, he argues, pushes developers to get AI systems to perform tricks instead of developing useful or interesting capabilities.

When GPT-4 was released in March this year, the firm behind it tested its performance on a series of benchmarks designed for machines, such as reading-comprehension tests. GPT-4 aced most of them. The company also set GPT-4 around 30 exams, including various subject-specific tests designed for US high-school students, known as Advanced Placement; an exam to assess the current state of US physicians’ clinical knowledge; and a standard test used in the selection process for US graduate studies, called the GRE. In the Uniform Bar Exam, which forms part of the qualification process for lawyers in US states, GPT-4 attained a score good enough to place it in the top 10% of people.

Other researchers agree that GPT-4 and other LLMs would probably pass the Turing test, at least in the sense that they can fool a lot of people. According to researchers at one company, more than 1.5 million people had played their online game based on the Turing test. Players chatted for two minutes with either another player or an LLM-powered bot that the researchers had prompted to behave like a person. The players correctly identified the bots only 60% of the time, which the researchers noted is not much better than chance.

As an alternative to the tricky task of defining what it means to think, Turing proposed a scenario that he called the imitation game. In it, a person called the interrogator holds text-based conversations with a hidden human and a computer. Turing wondered whether the interrogator could reliably detect the computer, and implied that if they could not, then the computer could be presumed to be thinking. The game became known as the Turing test, and it captured the public’s imagination.

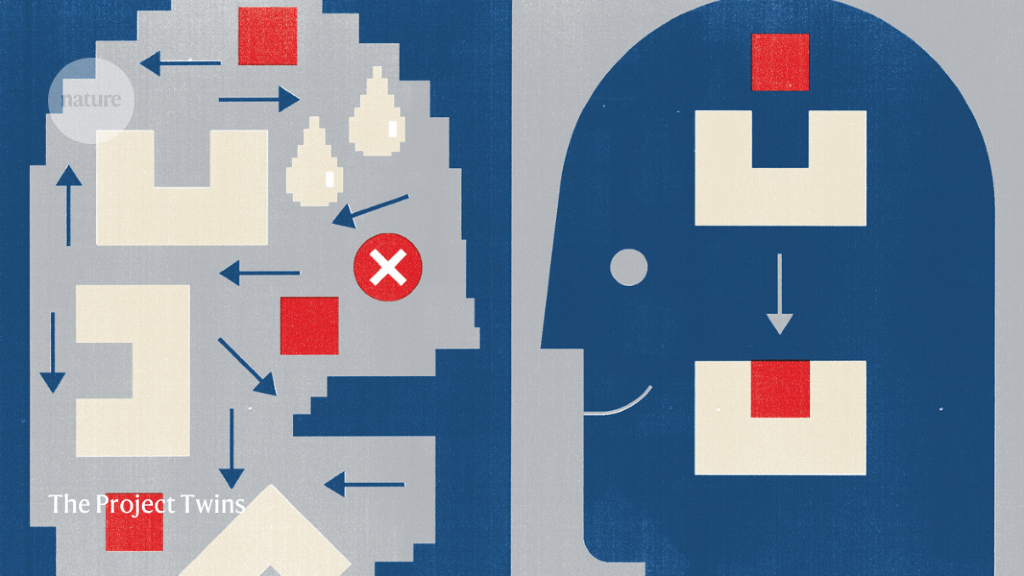

The world’s best artificial intelligence (AI) systems can pass tough exams, write convincingly human essays and chat so fluently that many find their output indistinguishable from people’s. What can’t they do? Solve simple visual logic puzzles.

There are good people on both sides of the debate. The split persists because there is no conclusive evidence supporting either position: we cannot simply point at something and say that it is intelligent.

How do large language models work?

The rise of these machines has caused both excitement and fear, both because they seem so human-like and because of their range of abilities, including writing essays and poems, coding and passing tough exams. But underlying these impressive achievements is a burning question: how do LLMs work? As with other neural networks, many of the behaviours of LLMs emerge from a training process rather than being specified by programmers. In many cases, the exact reasons why LLMs behave the way they do are not known, even to their own creators.

The team behind the logic puzzles aims to provide a better benchmark for testing the capabilities of AI systems, and to help address a conundrum about large language models (LLMs) such as GPT-4. On one hand, these models have achieved what were once considered landmark feats of machine intelligence. On the other, they seem less impressive, with glaring blind spots and an inability to reason about abstract concepts.

“I propose to consider the question, ‘Can machines think?’” So began a seminal 1950 paper by Alan Turing.

Confidence in a medicine doesn’t just come from observed safety and efficacy in clinical trials, however. Understanding the mechanism by which it works also matters, because that allows researchers to predict how it will behave in different contexts. For similar reasons, unravelling the mechanisms that give rise to LLMs’ behaviours (which can be thought of as the underlying ‘neuroscience’ of the models) is also necessary.